Tracing without LangChain

LangSmith lets you instrument any LLM application, no LangChain required. This aids in debugging, evaluating, and monitoring your app, without needing to learn any particular framework's unique semantics.

LangSmith instruments your apps through run traces. Each trace is made of one or more "runs" representing key event spans in your app. Each run is a structured log with a name, run_type, inputs / outputs, start/end times, as well as tags and other metadata. Runs within a trace are nested, forming a hierarchy referred to as a "run tree."
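To make the terminology concrete, here is a minimal sketch of a run record and its nesting in plain Python. The `Run` class, its field names and the `describe` helper are hypothetical. They mirror the fields listed above but are not the LangSmith SDK's data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional


@dataclass
class Run:
    """Hypothetical run record mirroring the fields described above."""
    name: str
    run_type: str  # e.g. "chain" or "llm"
    inputs: Dict[str, Any]
    outputs: Optional[Dict[str, Any]] = None
    start_time: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    end_time: Optional[datetime] = None
    tags: List[str] = field(default_factory=list)
    metadata: Dict[str, Any] = field(default_factory=dict)
    children: List["Run"] = field(default_factory=list)


def describe(run: Run, depth: int = 0) -> List[str]:
    """Depth-first walk that renders the run tree as indented lines."""
    lines = ["  " * depth + f"{run.name} ({run.run_type})"]
    for child in run.children:
        lines.extend(describe(child, depth + 1))
    return lines


# A trace is the root run plus everything nested beneath it.
root = Run(name="argument_chain", run_type="chain", inputs={"query": "sunshine"})
root.children.append(Run(name="call_openai", run_type="llm", inputs={"messages": []}))
print("\n".join(describe(root)))
```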

In Python, LangSmith offers two ways to help manage run trees:

- RunTree object: manages run data and communicates with the LangSmith platform. The create_child method simplifies the creation and organization of child runs within the hierarchy.

- @traceable decorator: automates the process of instrumenting function calls, handling the RunTree lifecycle for you.
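The bookkeeping that a create_child-style method saves you can be sketched as follows. `ToyRunTree` is a hypothetical stand-in, not the SDK's RunTree. It shows only one idea: each child records its parent's ID and shares the trace ID of the root run.

```python
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyRunTree:
    """Hypothetical stand-in for a run tree node (not langsmith's RunTree)."""
    name: str
    run_type: str = "chain"
    id: uuid.UUID = field(default_factory=uuid.uuid4)
    parent_run_id: Optional[uuid.UUID] = None
    trace_id: Optional[uuid.UUID] = None
    child_runs: List["ToyRunTree"] = field(default_factory=list)

    def __post_init__(self) -> None:
        # A root run starts its own trace; its trace ID is its own ID.
        if self.trace_id is None:
            self.trace_id = self.id

    def create_child(self, name: str, run_type: str = "chain") -> "ToyRunTree":
        # The child inherits the trace ID and points back at this run.
        child = ToyRunTree(
            name=name,
            run_type=run_type,
            parent_run_id=self.id,
            trace_id=self.trace_id,
        )
        self.child_runs.append(child)
        return child


root = ToyRunTree(name="argument_chain")
gen = root.create_child("argument_generator")
llm = gen.create_child("call_openai", run_type="llm")
print(llm.parent_run_id == gen.id, llm.trace_id == root.id)  # True True
```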

This walkthrough guides you through creating a chat app and instrumenting it with the @traceable decorator. You will add tags and other metadata to your runs to help filter and organize the resulting traces. The chat app uses three 'chain' components to generate a text argument on a provided subject:

- An argument generation function

- A critique function

- A refinement function

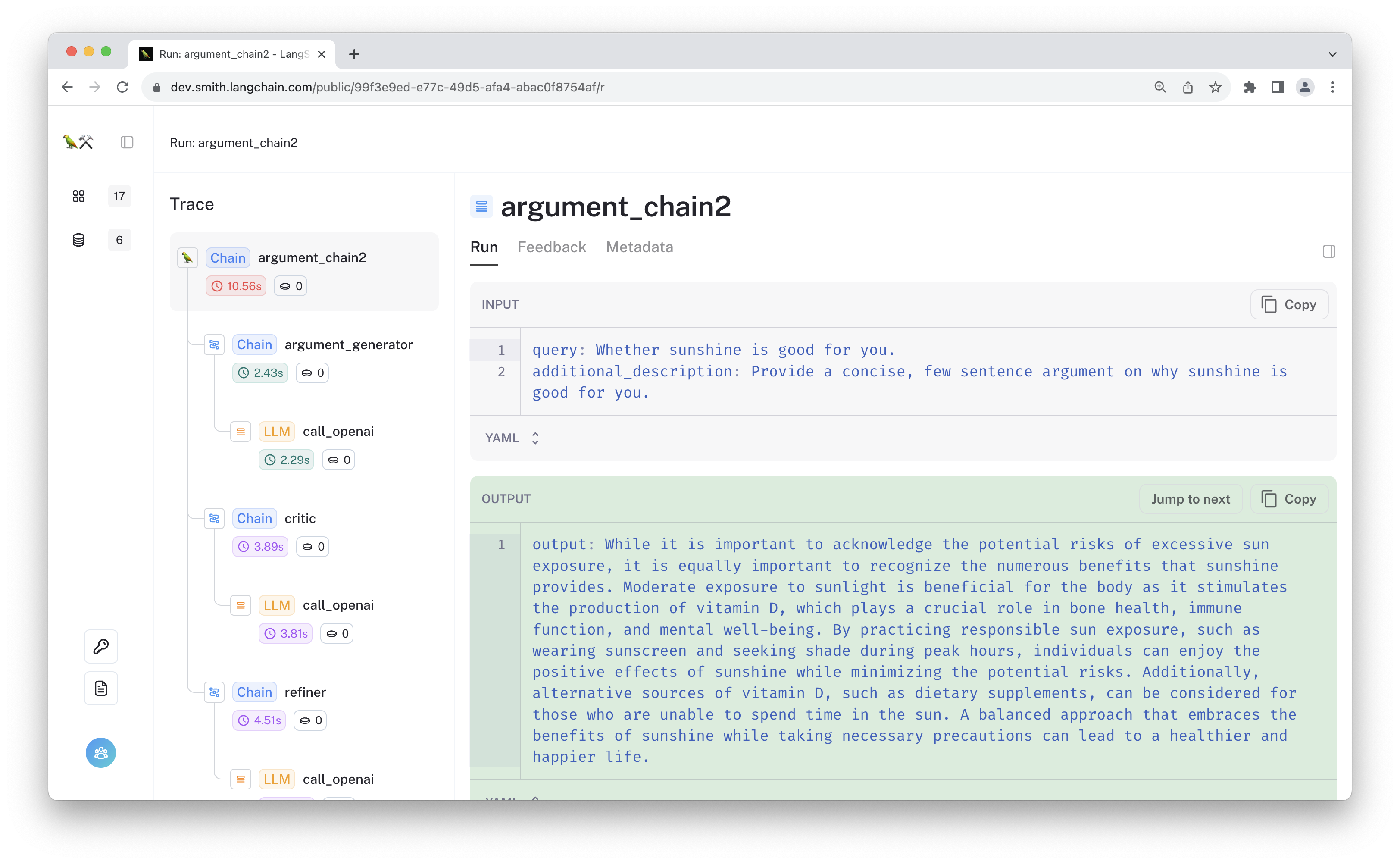

Each of these functions will call an openai llm function, and the whole series of calls will itself be organized beneath a parent argument_chain function, generating a trace like this:

Ready to build? Let's start!

Prerequisites

Let's first install the required packages. The app uses OpenAI's SDK, and the tracing uses the langsmith SDK.

Install the latest versions to make sure you have all the required updates.

```python
# %pip install -U langsmith > /dev/null
# %pip install -U openai > /dev/null
```

Next, configure the API keys in the environment so that traces are logged to your account.

```python
import os

# Update with your API URL if using a self-hosted instance of LangSmith.
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
os.environ["LANGCHAIN_API_KEY"] = "YOUR API KEY"  # Update with your API key
os.environ["LANGCHAIN_TRACING_V2"] = "true"

project_name = "YOUR PROJECT NAME"  # Update with your project name
os.environ["LANGCHAIN_PROJECT"] = project_name  # Optional: "default" is used if not set

os.environ["OPENAI_API_KEY"] = "YOUR OPENAI API KEY"
```
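Before running anything, it can help to confirm that the variables above are actually set. The `missing_tracing_env` helper below is hypothetical and not part of the LangSmith SDK. It checks the variable names used in the cell above. The placeholder check assumes you kept the "YOUR ..." placeholder format.

```python
import os
from typing import Iterable, List, Mapping

# The variables the cell above sets (LANGCHAIN_PROJECT is optional, so it is omitted).
REQUIRED_VARS = ("LANGCHAIN_API_KEY", "LANGCHAIN_TRACING_V2", "OPENAI_API_KEY")


def missing_tracing_env(
    env: Mapping[str, str], required: Iterable[str] = REQUIRED_VARS
) -> List[str]:
    """Return required variables that are unset, empty, or still placeholders."""
    problems = []
    for name in required:
        value = env.get(name, "").strip()
        if not value or value.startswith("YOUR "):
            problems.append(name)
    return problems


missing = missing_tracing_env(os.environ)
if missing:
    print("Update these before tracing:", ", ".join(missing))
```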

Using the decorator

Next, define your chat application. Use the @traceable decorator to automatically instrument your function calls.

The decorator works by creating a run tree for you each time the function is called and inserting it within the current trace. The function inputs, name, and other information are then streamed to LangSmith. When the function returns a response or raises an error, that information is also added to the tree, and the updates are patched to LangSmith so you can detect and diagnose sources of errors. This is all done on a background thread to avoid blocking your app's execution.

The app below combines three runs with the "chain" run_type under a parent "chain" run. Run types can be thought of as a special type of tag that helps index and visualize the logged information. Each of the "chain" runs calls a function typed as an "llm" run; these correspond to LLM or chat model calls. We recommend typing most functions as chain runs, since this is the most general-purpose type.
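As a worked example, here is the shape of the trace that a single argument_chain call should produce, written as nested tuples in plain Python. This is a hand-written description derived from the code in this walkthrough, not data pulled from LangSmith. Counting the runs by run_type shows how the type acts like an index over them.

```python
from collections import Counter
from typing import Iterator, Tuple

# (name, run_type, children) for one call of argument_chain
EXPECTED_TRACE = (
    "argument_chain", "chain", [
        ("argument_generator", "chain", [("call_openai", "llm", [])]),
        ("critic", "chain", [("call_openai", "llm", [])]),
        ("refiner", "chain", [("call_openai", "llm", [])]),
    ],
)


def iter_runs(node: Tuple) -> Iterator[Tuple[str, str]]:
    """Yield (name, run_type) for every run in the tree, depth-first."""
    name, run_type, children = node
    yield name, run_type
    for child in children:
        yield from iter_runs(child)


print(Counter(run_type for _, run_type in iter_runs(EXPECTED_TRACE)))
# Counter({'chain': 4, 'llm': 3})
```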

```python
from datetime import datetime
from typing import List

from openai import OpenAI
from openai.types.chat import ChatCompletion
from langsmith.run_helpers import traceable

openai_client = OpenAI()


# We label this function as an 'llm' call to better organize it in the trace
@traceable(run_type="llm")
def call_openai(
    messages: List[dict], model: str = "gpt-3.5-turbo", temperature: float = 0.0
) -> ChatCompletion:
    # Returns the full completion object; callers extract the message text
    return openai_client.chat.completions.create(
        model=model,
        messages=messages,
        temperature=temperature,
    )


# The 'chain' run_type can correspond to any function and is the most common
@traceable(run_type="chain")
def argument_generator(query: str, additional_description: str = "") -> str:
    return (
        call_openai(
            [
                {
                    "role": "system",
                    "content": "You are a debater making an argument on a topic. "
                    f"{additional_description}"
                    f" The current time is {datetime.now()}",
                },
                {"role": "user", "content": f"The discussion topic is {query}"},
            ]
        )
        .choices[0]
        .message.content
    )


@traceable(run_type="chain")
def critic(argument: str) -> str:
    return (
        call_openai(
            [
                {
                    "role": "system",
                    "content": "You are a critic."
                    "\nWhat unresolved questions or criticism do you have after reading the following argument? "
                    "Provide a concise summary of your feedback.",
                },
                {"role": "system", "content": argument},
            ]
        )
        .choices[0]
        .message.content
    )


@traceable(run_type="chain")
def refiner(
    query: str, additional_description: str, current_arg: str, criticism: str
) -> str:
    return (
        call_openai(
            [
                {
                    "role": "system",
                    "content": "You are a debater making an argument on a topic. "
                    f"{additional_description}"
                    f" The current time is {datetime.now()}",
                },
                {"role": "user", "content": f"The discussion topic is {query}"},
                {"role": "assistant", "content": current_arg},
                {"role": "user", "content": criticism},
                {
                    "role": "system",
                    "content": "Please generate a new argument that incorporates the feedback from the user.",
                },
            ]
        )
        .choices[0]
        .message.content
    )


@traceable(run_type="chain")
def argument_chain(query: str, additional_description: str = "") -> str:
    # Generate, critique, then refine; each step becomes a child run
    argument = argument_generator(query, additional_description)
    criticism = critic(argument)
    return refiner(query, additional_description, argument, criticism)
```

Now call the chain. If you set up your API key correctly at the start of this notebook, all the results should be traced to LangSmith. We will prompt the app to generate an argument that sunshine is good for you.

```python
result = argument_chain(
    "Whether sunshine is good for you.",
    additional_description="Provide a concise, few sentence argument on why sunshine is good for you.",
)
print(result)
```

Sunshine, when enjoyed responsibly, offers numerous benefits for overall health and well-being. It is a natural source of vitamin D, which plays a vital role in bone health, immune function, and mental well-being. While excessive sun exposure can lead to sunburns and increase the risk of skin damage and cancer, moderate and safe sun exposure, along with proper sun protection measures, can help maximize the benefits while minimizing the risks. It is important to strike a balance and make informed choices to harness the positive effects of sunshine while taking necessary precautions to protect our skin.

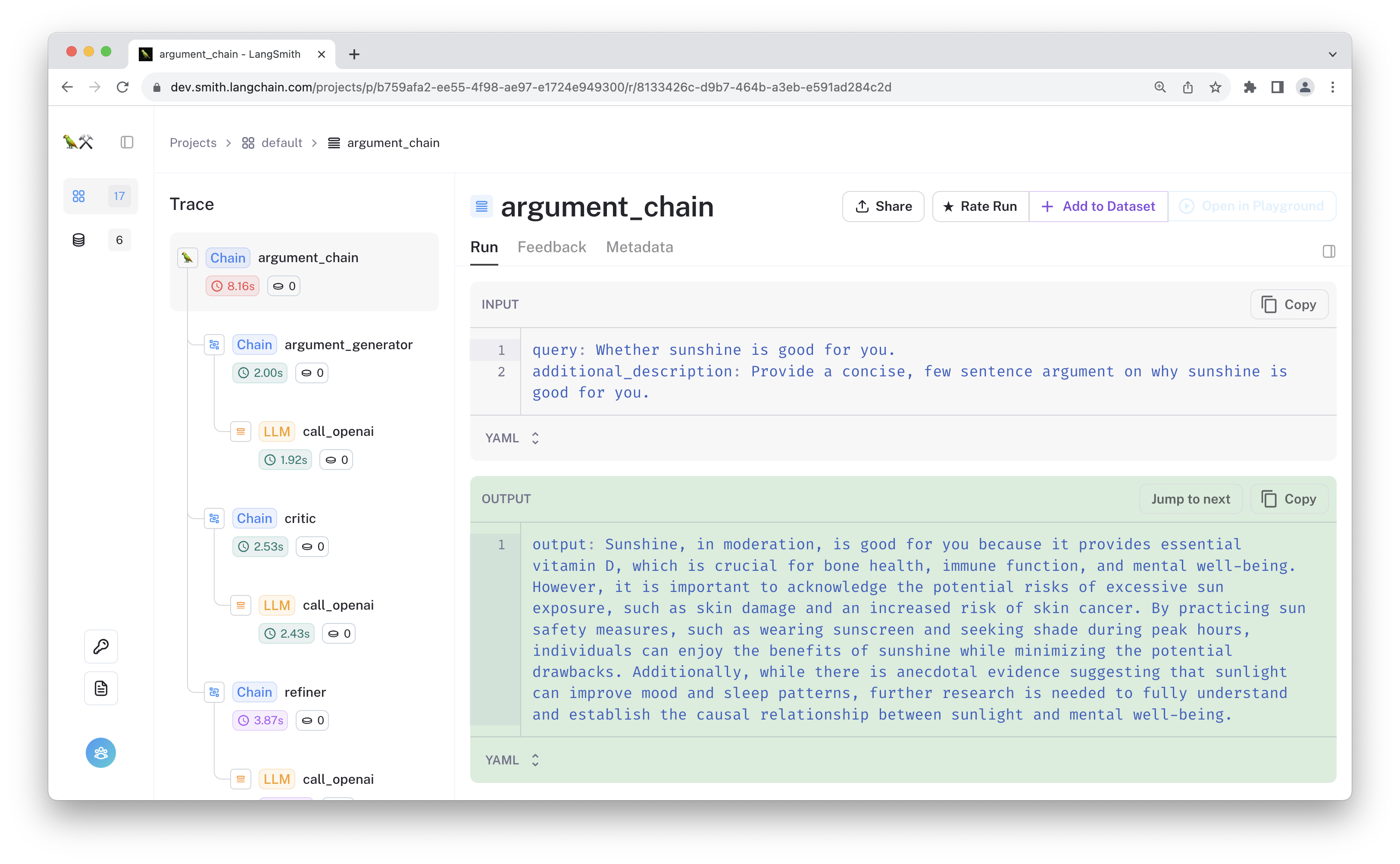

Working with runs

The above is all you need to save your app traces to LangSmith! You can try changing the functions or even raising errors in the above code to see how it's visualized in LangSmith.

The decorator does all this work behind the scenes, but you may want to use the run ID for other things, like monitoring user feedback or logging to another destination. You can do this by modifying your function signature to accept a run_tree keyword argument. Doing so instructs the @traceable decorator to inject the current run tree object into your function. This can be useful if you want to:

- Add user feedback to the run

- Inspect or save the run ID or its parent

- Manually log child runs or their information to another destination

- Continue a trace in other functions that may be called within a separate thread or process pool (or even on separate machines)
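One way a decorator can inject an argument only when the function asks for it is to inspect the function's signature. The sketch below illustrates that idea. `inject_run_tree` and the dict used as a run object are hypothetical and made up for this example. This is not LangSmith's implementation, and it omits everything except the injection step.

```python
import functools
import inspect
import uuid
from typing import Any, Callable, Dict


def inject_run_tree(func: Callable) -> Callable:
    """Pass a fresh run object as `run_tree` if the function declares that parameter."""
    wants_run_tree = "run_tree" in inspect.signature(func).parameters

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        run: Dict[str, Any] = {"id": uuid.uuid4(), "name": func.__name__}
        if wants_run_tree:
            kwargs["run_tree"] = run  # the caller never supplies this
        return func(*args, **kwargs)

    return wrapper


@inject_run_tree
def with_id(query: str, *, run_tree: Dict[str, Any]) -> uuid.UUID:
    return run_tree["id"]


@inject_run_tree
def without_id(query: str) -> str:
    return query


print(isinstance(with_id("sunshine"), uuid.UUID), without_id("sunshine"))
# True sunshine
```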

Below, the argument_chain2 function is identical to argument_chain except that it also returns the ID of the run tree for use outside the function context.

```python
from typing import Tuple
from uuid import UUID

from langsmith import RunTree


@traceable(run_type="chain")
def argument_chain2(
    query: str, *, additional_description: str = "", run_tree: RunTree
) -> Tuple[str, UUID]:
    argument = argument_generator(query, additional_description)
    criticism = critic(argument)
    refined_argument = refiner(query, additional_description, argument, criticism)
    return refined_argument, run_tree.id


# We don't need to pass in the `run_tree` when calling the function. It will be injected
# by the decorator
result, run_id = argument_chain2(
    "Whether sunshine is good for you.",
    additional_description="Provide a concise, few sentence argument on why sunshine is good for you.",
)
```

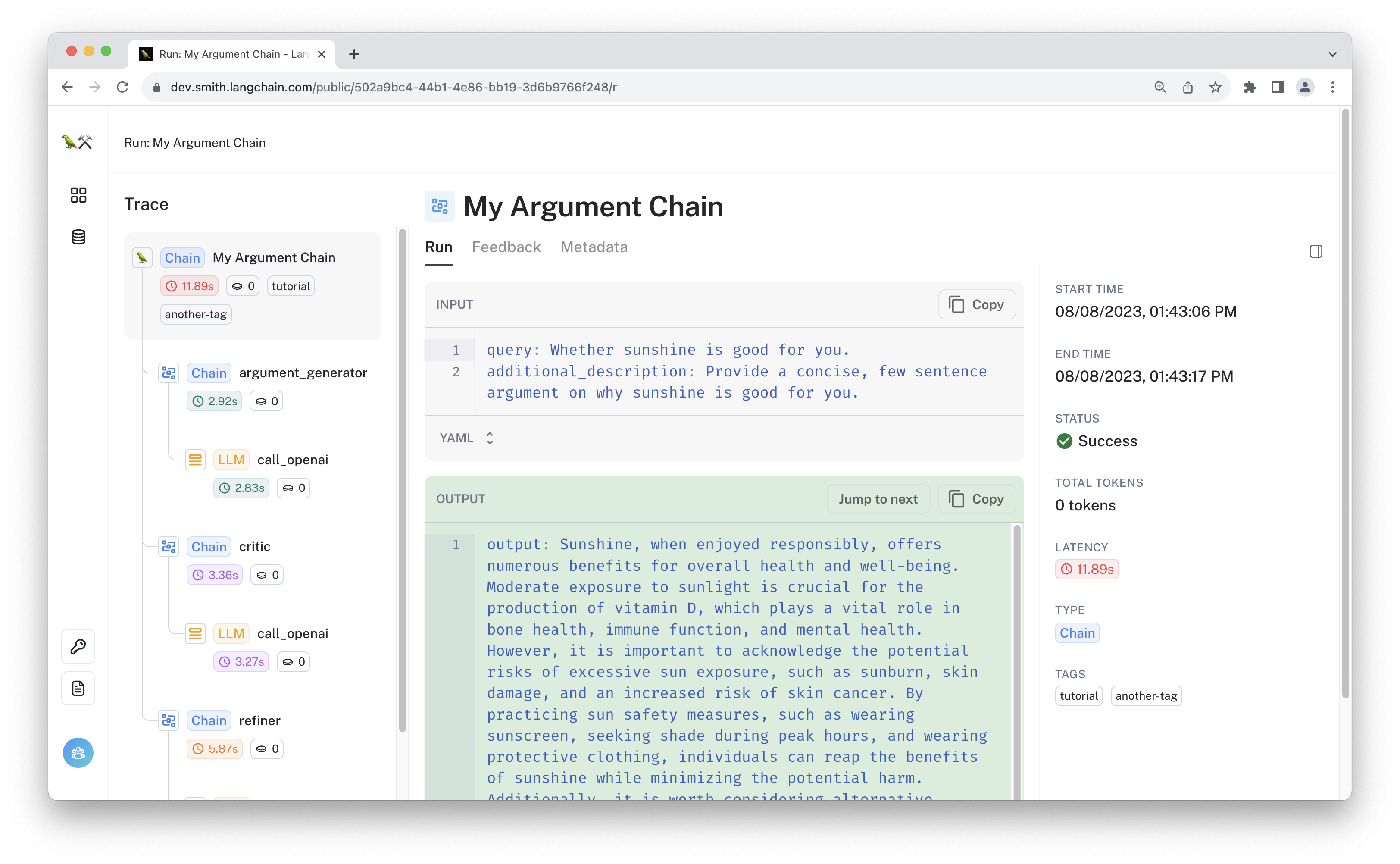

With the run ID, you can do things like log feedback from a user after the run is completed, or you can create a public link to share. We will do both below.

```python
from langsmith import Client

client = Client()

client.create_feedback(
    run_id,
    "user_feedback",
    score=0.5,
    correction={"generation": "Sunshine is nice. Full stop."},
)
```

Feedback(id=UUID('487f90d9-90fb-445c-a800-1bef841e016e'), created_at=datetime.datetime(2023, 10, 26, 17, 46, 7, 955301, tzinfo=datetime.timezone.utc), modified_at=datetime.datetime(2023, 10, 26, 17, 46, 7, 955316, tzinfo=datetime.timezone.utc), run_id=UUID('5941500c-7f00-4081-854d-59bdf358e3ae'), key='user_feedback', score=0.5, value=None, comment=None, correction={'generation': 'Sunshine is nice. Full stop.'}, feedback_source=FeedbackSourceBase(type='api', metadata={}))

Configuring traces

One way to make your application traces more useful or actionable is to tag or add metadata to the logs. That way you can do things like track the version of your code or deployment environment in a single project.

The traceable decorator can be configured to add additional information such as:

- string tags

- arbitrary key-value metadata

- custom trace names

- a manually specified run ID

Below is an example.

```python
# You can add tags and metadata (or even the project name) directly in the decorator
@traceable(
    run_type="chain",
    name="My Argument Chain",
    tags=["tutorial"],
    metadata={"githash": "e38f04c83"},
)
def argument_chain_3(query: str, additional_description: str = "") -> str:
    argument = argument_generator(query, additional_description)
    criticism = critic(argument)
    return refiner(query, additional_description, argument, criticism)
```

This information can also be added when the function is called. This is done by passing a dictionary to the langsmith_extra argument.

Below, let's manually add some tags and the UUID for the run. We can then use this UUID in the same way as before.

```python
from uuid import uuid4

# You can manually specify an ID rather than letting
# the decorator generate one for you
requested_uuid = uuid4()

result = argument_chain_3(
    "Whether sunshine is good for you.",
    additional_description="Provide a concise, few sentence argument on why sunshine is good for you.",
    # You can also add tags, metadata, or the run ID at call time
    # via the langsmith_extra argument.
    langsmith_extra={
        "tags": ["another-tag"],
        "metadata": {"another-key": 1},
        "run_id": requested_uuid,
    },
)

# We can log feedback for the run directly, since we chose its ID ourselves
client.create_feedback(
    requested_uuid, "user_feedback", score=1, source_info={"origin": "example notebook"}
)
```

Feedback(id=UUID('5451225e-9ca8-473f-b774-d571b6f0f15b'), created_at=datetime.datetime(2023, 10, 26, 17, 47, 2, 163360, tzinfo=datetime.timezone.utc), modified_at=datetime.datetime(2023, 10, 26, 17, 47, 2, 163366, tzinfo=datetime.timezone.utc), run_id=UUID('67838724-6b14-4648-bb7a-3def0684dcdd'), key='user_feedback', score=1, value=None, comment=None, correction=None, feedback_source=FeedbackSourceBase(type='api', metadata={'origin': 'example notebook'}))
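Decorator-level settings and per-call langsmith_extra values have to be combined somehow. That can be sketched as a small merge function. `merge_run_config` is hypothetical, and its merge policy is an assumption for illustration:

- Tags from both sources are concatenated.
- Call-time metadata keys override decorator keys.
- A caller-supplied run_id is used as-is; otherwise a fresh UUID is generated.

Check the SDK's actual behaviour in your version before relying on any of this.

```python
import uuid
from typing import Any, Dict, List, Optional


def merge_run_config(
    decorator_tags: List[str],
    decorator_metadata: Dict[str, Any],
    extra: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Combine decorator options with per-call extras (assumed merge policy)."""
    extra = extra or {}
    run_id = extra.get("run_id")
    return {
        # Tags from both places are kept in order, without duplicates.
        "tags": list(dict.fromkeys(decorator_tags + extra.get("tags", []))),
        # Call-time metadata wins when the same key appears twice.
        "metadata": {**decorator_metadata, **extra.get("metadata", {})},
        # Use the caller's ID if given, so feedback can target it later.
        "run_id": run_id if run_id is not None else uuid.uuid4(),
    }


requested = uuid.uuid4()
config = merge_run_config(
    ["tutorial"],
    {"githash": "e38f04c83"},
    {"tags": ["another-tag"], "metadata": {"another-key": 1}, "run_id": requested},
)
print(config["tags"], config["metadata"], config["run_id"] == requested)
# ['tutorial', 'another-tag'] {'githash': 'e38f04c83', 'another-key': 1} True
```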

Now you can navigate to the run with the requested UUID to see the simulated "user feedback".

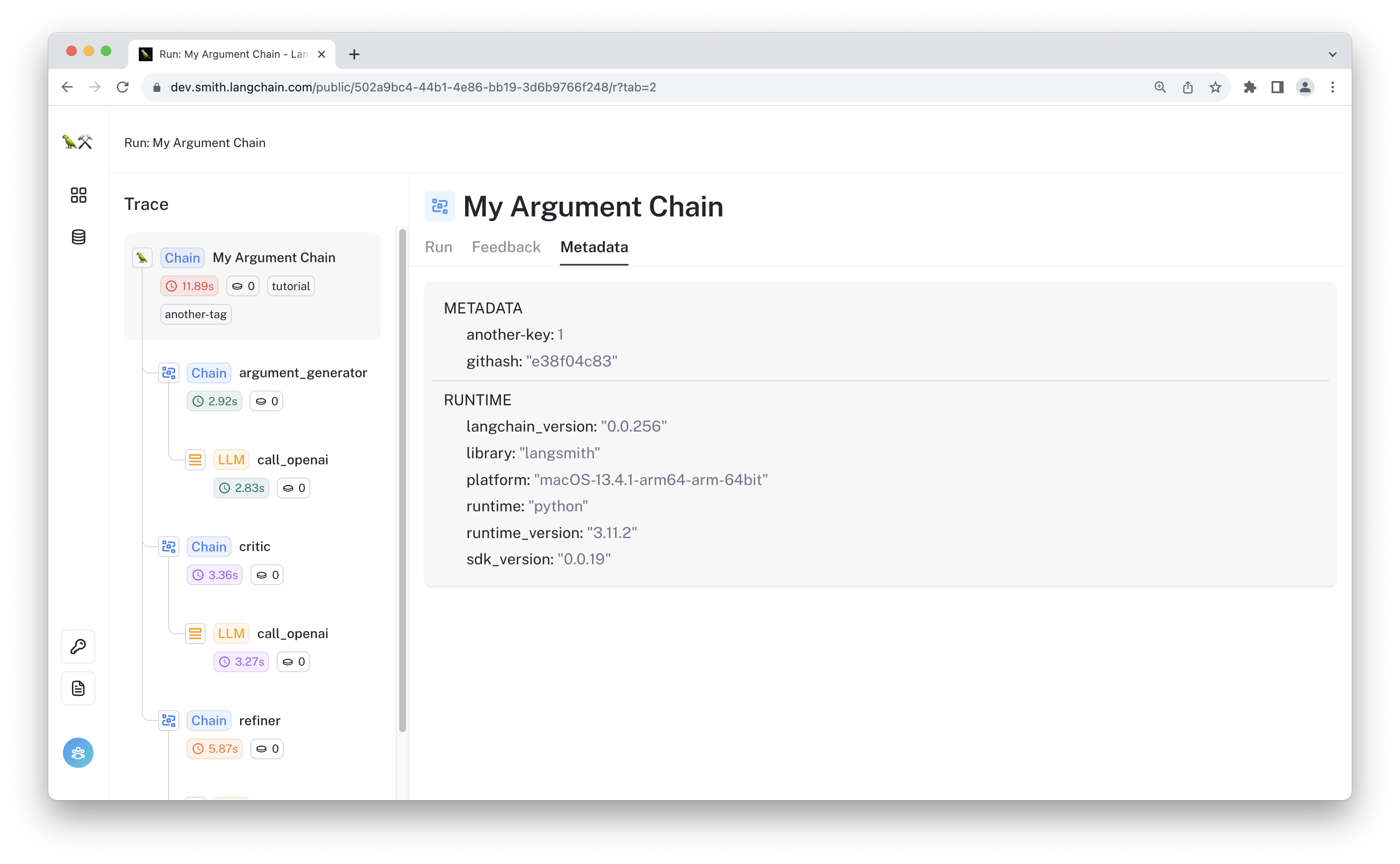

Clicking into the 'Metadata' tab, you can see that the metadata has been stored for the trace.

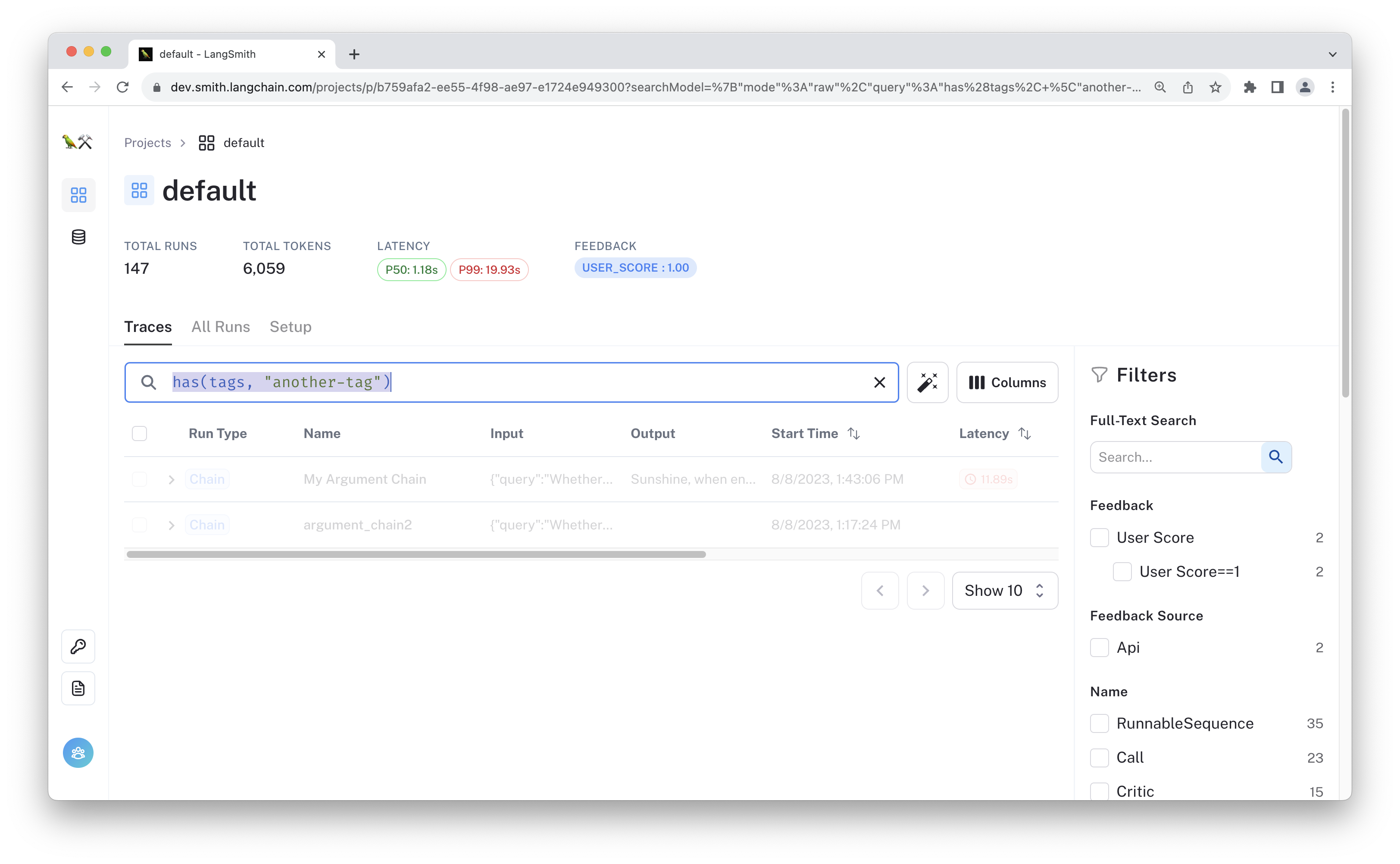

Once you've stored these tagged runs, you can filter and search right in the web app by clicking on the suggested filters or by writing out the query in the search bar.

Recap

In this walkthrough, you made an example LLM application and instrumented it using the @traceable decorator from the LangSmith SDK.

You also added tags and metadata and even logged feedback to the runs. The traceable decorator is a lightweight and flexible way to start debugging and monitoring your application.