Tracing via the REST API

It's likely that your production LLM application is written in a language other than Python or JavaScript. In this case, you can use the REST API to log runs and take advantage of LangSmith's tracing and monitoring functionality. The OpenAPI spec for posting runs can be found here.

LangSmith tracing is built on "runs", which are analogous to traces and spans in OpenTelemetry. The basics of logging a run to LangSmith are:

- Submit a POST request to "https://api.smith.langchain.com/runs"

- The JSON body of the request must include a name, run_type, and inputs, plus any other desired information.

- An "x-api-key" header must be provided to authenticate, using a valid API key created in the LangSmith app
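The three requirements above can be sketched with only the Python standard library. This is a minimal illustration, not an official client: the helper name build_run_request is hypothetical, and the request is built but deliberately not sent, so it can be inspected offline.

```python
import json
import urllib.request

API_URL = "https://api.smith.langchain.com/runs"


def build_run_request(
    api_key: str, name: str, run_type: str, inputs: dict
) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates a run."""
    # The body carries the required name, run_type, and inputs fields.
    body = {"name": name, "run_type": run_type, "inputs": inputs}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        # The API key travels in the "x-api-key" header.
        headers={"x-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


req = build_run_request("YOUR API KEY", "MyFirstRun", "chain", {"text": "Foo"})
print(req.get_method(), req.full_url)
```

Sending is a separate, explicit step, for example urllib.request.urlopen(req). The rest of this walkthrough uses the requests library for the same purpose.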

In the following walkthrough, you will do the following:

- Log a generic chain run to LangSmith via the REST API

- Add additional tags, metadata, and other information to the run

- Log LLM (chat and completion model) runs to calculate tokens and render chat messages

- Log nested runs by setting the parent run id

Let's get started!

Prerequisites

Before moving on, make sure to create an account in LangSmith and create an API key. Once that is done, you may continue. There are no dependencies for this tutorial apart from Python and the requests library, which the examples use to make HTTP calls.

import os

# Update with your API URL if using a hosted instance of LangSmith.
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
os.environ["LANGCHAIN_API_KEY"] = "YOUR API KEY"  # Update with your API key
project_name = "YOUR PROJECT NAME"  # Update with your project name

Logging a Run

Below is a minimal example of how to create a run using the REST API. Since each run represents the start and end of a function call (or other unit of work), we typically log the run in two calls:

- First create the run by submitting a POST request at the beginning of the function call

- Then update the run via a PATCH request at the end.

This ensures the runs appear in a timely manner, even for long-running operations.

The following example demonstrates this approach.

import datetime
import uuid

import requests

_LANGSMITH_API_KEY = os.environ["LANGCHAIN_API_KEY"]
run_id = str(uuid.uuid4())

# Create the run at the start of the unit of work.
res = requests.post(
    "https://api.smith.langchain.com/runs",
    json={
        "id": run_id,
        "name": "MyFirstRun",
        "run_type": "chain",
        "start_time": datetime.datetime.utcnow().isoformat(),
        "inputs": {"text": "Foo"},
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

# ... do some work ...

# Update the same run with its outputs and end time.
requests.patch(
    f"https://api.smith.langchain.com/runs/{run_id}",
    json={
        "outputs": {"my_output": "Bar"},
        "end_time": datetime.datetime.utcnow().isoformat(),
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

<Response [200]>

Here we manually define the run's ID so that we can reuse it in the PATCH request. It is passed in the JSON body when creating the run and as a path parameter when updating it. We have also authenticated to LangSmith by passing our API key in the "x-api-key" header.

You can find the logged run by navigating to the projects page in LangSmith and clicking on your default project. There you will see a barebones "chain" run with the name "MyFirstRun". It should look something like the following:

<a href="https://smith.langchain.com/public/8fbe61f2-6d23-47f4-b6d7-6946322aad48/r"><img src="./static/minimal.png" alt="minimal trace example" style="width:75%"></a>

Not much information is included, since we haven't added any child runs, tags, or metadata yet. It is marked as a "success" since we patched the end time without errors.
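The POST-then-PATCH pattern can be wrapped in a context manager so that the end time, and any error, is always recorded. The following is a sketch under stated assumptions: logged_run and the send callable are hypothetical names, and send stands in for whatever HTTP client you use, so the example runs offline by recording calls instead of sending them.

```python
import contextlib
import datetime
import uuid
from typing import Callable, Iterator

Sender = Callable[[str, str, dict], None]  # (method, url, json_body)
BASE_URL = "https://api.smith.langchain.com/runs"


def _now() -> str:
    # Timezone-aware UTC timestamp in ISO format.
    return datetime.datetime.now(datetime.timezone.utc).isoformat()


@contextlib.contextmanager
def logged_run(
    send: Sender, name: str, inputs: dict, run_type: str = "chain"
) -> Iterator[dict]:
    """POST the run on entry, then PATCH it on exit with outputs or an error."""
    run_id = str(uuid.uuid4())
    send("POST", BASE_URL, {
        "id": run_id,
        "name": name,
        "run_type": run_type,
        "inputs": inputs,
        "start_time": _now(),
    })
    outputs: dict = {}
    try:
        yield outputs  # the caller fills this dict with the run's outputs
    except Exception as exc:
        send("PATCH", f"{BASE_URL}/{run_id}", {"error": str(exc), "end_time": _now()})
        raise
    else:
        send("PATCH", f"{BASE_URL}/{run_id}", {"outputs": outputs, "end_time": _now()})


# Offline demonstration: record the calls instead of sending them.
calls = []
with logged_run(lambda m, u, b: calls.append((m, u, b)), "MyFirstRun", {"text": "Foo"}) as out:
    out["my_output"] = "Bar"
print([method for method, _, _ in calls])  # ['POST', 'PATCH']
```

To send for real, send could be something like lambda m, u, b: requests.request(m, u, json=b, headers={"x-api-key": _LANGSMITH_API_KEY}).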

In addition to the values included above, you can also provide any of the following information in the POST request:

{
    "name": "string",
    "inputs": {},
    "run_type": "string",
    "start_time": "2019-08-24T14:15:22Z",  # UTC timestamp in ISO format
    "end_time": "2019-08-24T14:15:22Z",  # UTC timestamp in ISO format
    "extra": {},
    "error": "string",
    "outputs": {},
    "parent_run_id": "f8faf8c1-9778-49a4-9004-628cdb0047e5",
    "events": [
        {}
    ],
    "tags": [
        "string"
    ],
    "id": "497f6eca-6276-4993-bfeb-53cbbbba6f08",
    "session_id": "1ffd059c-17ea-40a8-8aef-70fd0307db82",
    "session_name": "string",  # This is the name of the PROJECT. "default" if not specified. Sessions are the old name for projects.
    "reference_example_id": "9fb06aaa-105f-4c87-845f-47d62ffd7ee6"
}

This can also be found in the API documentation.
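Since only name, run_type, and inputs are required, it can help to build payloads through a small function that fills in the ID and start time and rejects incomplete bodies before anything is sent. This is an illustrative sketch: build_run_payload and utc_timestamp are hypothetical helpers, and the check mirrors only the required fields named in this walkthrough, not the full OpenAPI schema.

```python
import datetime
import uuid
from typing import Any, Dict

REQUIRED_FIELDS = ("name", "run_type", "inputs")


def utc_timestamp() -> str:
    """Return the current UTC time as an ISO 8601 string."""
    return datetime.datetime.now(datetime.timezone.utc).isoformat()


def build_run_payload(**fields: Any) -> Dict[str, Any]:
    """Fill in id and start_time if absent, then check the required fields."""
    payload = {"id": str(uuid.uuid4()), "start_time": utc_timestamp(), **fields}
    missing = [key for key in REQUIRED_FIELDS if key not in payload]
    if missing:
        raise ValueError(f"missing required run fields: {missing}")
    return payload


payload = build_run_payload(
    name="MyFirstRun", run_type="chain", inputs={"text": "Foo"}, tags=["rest"]
)
print(sorted(payload))
```

Any optional field from the listing above (tags, extra, parent_run_id, and so on) can be passed as an extra keyword argument.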

Let's look at a more complex chain example:

import platform

run_id = str(uuid.uuid4())

requests.post(
    "https://api.smith.langchain.com/runs",
    json={
        "id": run_id,
        "name": "MySecondRun",
        "run_type": "chain",
        "inputs": {"text": "Foo"},
        "start_time": datetime.datetime.utcnow().isoformat(),
        "session_name": project_name,
        "tags": ["langsmith", "rest", "my-example"],
        "extra": {
            "metadata": {"my_key": "My value"},
            "runtime": {
                "platform": platform.platform(),
            },
        },
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

# ... do some work ...

events = []
# Events like new tokens and retries can be added
events.append({"event_name": "retry", "reason": "never gonna give you up"})
events.append({"event_name": "new_token", "value": "foo"})

res = requests.patch(
    f"https://api.smith.langchain.com/runs/{run_id}",
    json={
        "end_time": datetime.datetime.utcnow().isoformat(),
        "outputs": {"generated": "Bar"},
        "events": events,
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

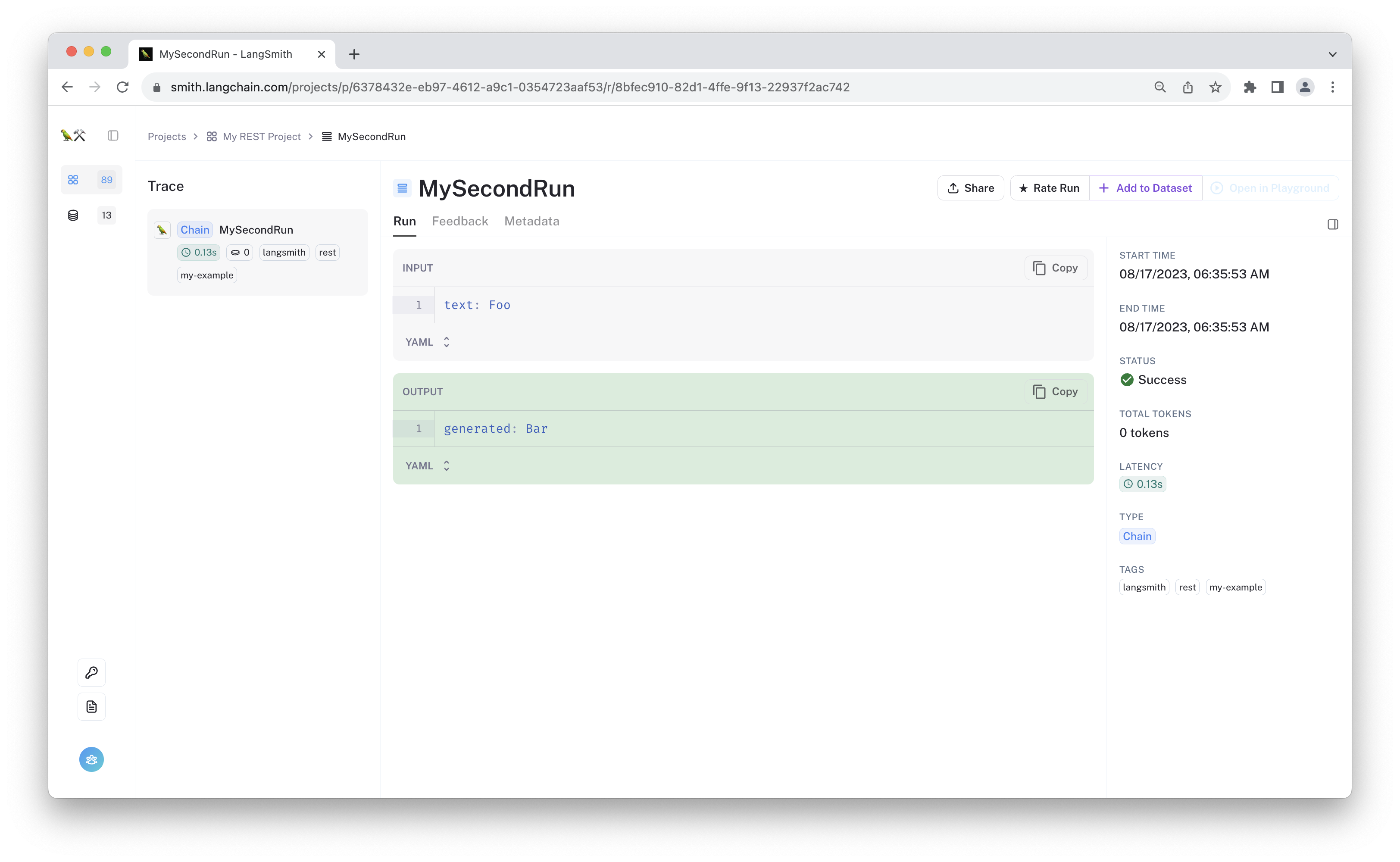

In this example, we have logged a chain run to the project named by project_name (for example, "My REST Project") by passing the "session_name" field. A new project is created if one with that name does not already exist.

We also tagged the run with the langsmith, rest, and my-example tags, and added metadata and runtime information to the run.

Finally, we added a retry event and a new token event to the run. Events can be used to log additional minor information about what occurred during a run (such as streaming and retry events), when that information doesn't merit an entire child run and is not the final output of the run.
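As one illustration of where such events might come from, the sketch below collects a "retry" event for each failed attempt of a flaky call. The helper call_with_retries and the flaky stand-in function are hypothetical; only the event shape (an event_name plus free-form fields such as reason) is taken from the example above.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def call_with_retries(fn: Callable[[], T], max_attempts: int = 3) -> Tuple[T, List[dict]]:
    """Call fn, recording one "retry" event per failed attempt."""
    events: List[dict] = []
    for attempt in range(1, max_attempts + 1):
        try:
            return fn(), events
        except Exception as exc:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller log the error
            events.append({"event_name": "retry", "reason": str(exc)})
    raise ValueError("max_attempts must be at least 1")


# A stand-in for a flaky call: fails twice, then succeeds.
attempts = {"count": 0}


def flaky() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError(f"attempt {attempts['count']} failed")
    return "Bar"


result, events = call_with_retries(flaky)
print(result, [event["reason"] for event in events])
```

The collected list can then be passed as the "events" value in the PATCH body, alongside the outputs and end_time.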

Below is an example screenshot of what the logged trace from the example above looks like. The new run now has inputs and outputs, a latency calculation, and tags.

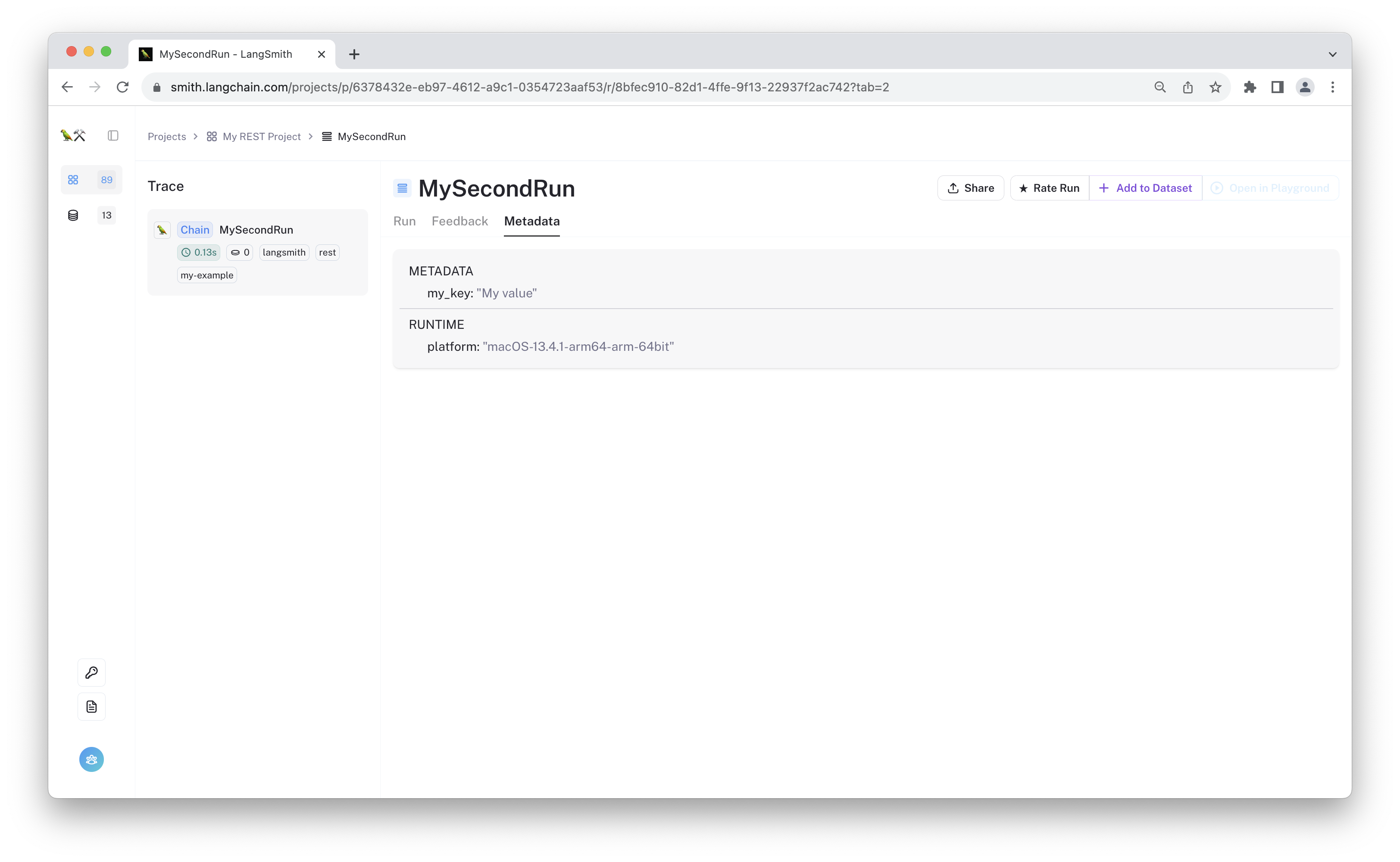

To see the logged metadata and other runtime information you saved in the trace above, you can navigate to the "metadata" tab:

Logging LLM Runs

The "chain" runs above are versatile and can represent any function in your application. However, some of LangSmith's functionality is only available for LLM runs. Correctly formatted runs with the run_type of "llm" let you:

- Track token usage

- Render "prettier" chat or completion message formats for better readability.

LangSmith supports OpenAI's LLM message schema, so you can directly log the inputs and outputs of your call to any OpenAI-compatible API without having to convert them to a new format.

We will show examples below.

Logging LLM Chat Messages

To log messages in the "chat" model format (role and message dictionaries), LangSmith expects the following format:

- Provide messages: [{"role": string, "content": string}] as a key-value pair in the inputs

- Provide choices: [{"message": {"role": string, "content": string}}] as a key-value pair in the outputs.

For function calling, you can also pass a functions=[...] key-value pair in the inputs, and include a function_call: {"name": string, "arguments": {}} key-value pair in the response message choice.

Additional parameters, such as the temperature and model, should be passed in as inputs and will be registered as "invocation_params" by LangSmith. The following example shows how to log a chat model run with functions in the inputs:

run_id = str(uuid.uuid4())

requests.post(
    "https://api.smith.langchain.com/runs",
    json={
        "id": run_id,
        "name": "MyChatModelRun",
        "run_type": "llm",
        "inputs": {
            "messages": [{"role": "user", "content": "What's the weather in SF like?"}],
            # Optional
            "model": "text-davinci-003",
            "functions": [
                {
                    "name": "get_current_weather",
                    "description": "Get the current weather in a given location",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "location": {
                                "type": "string",
                                "description": "The city and state, e.g. San Francisco, CA",
                            },
                            "unit": {
                                "type": "string",
                                "enum": ["celsius", "fahrenheit"],
                            },
                        },
                        "required": ["location"],
                    },
                }
            ],
            # You can add other invocation parameters as k-v pairs
            "temperature": 0.0,
        },
        "start_time": datetime.datetime.utcnow().isoformat(),
        "session_name": project_name,
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

requests.patch(
    f"https://api.smith.langchain.com/runs/{run_id}",
    json={
        "end_time": datetime.datetime.utcnow().isoformat(),
        "outputs": {
            "choices": [
                {
                    "index": 0,
                    "message": {
                        "role": "assistant",
                        # Content is whatever string response the
                        # model generates
                        "content": "Mostly cloudy.",
                        # Function call is the function invocation and arguments
                        # as a string
                        "function_call": {
                            "name": "get_current_weather",
                            "arguments": '{\n"location": "San Francisco, CA"\n}',
                        },
                    },
                    "finish_reason": "function_call",
                }
            ],
        },
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

<Response [200]>

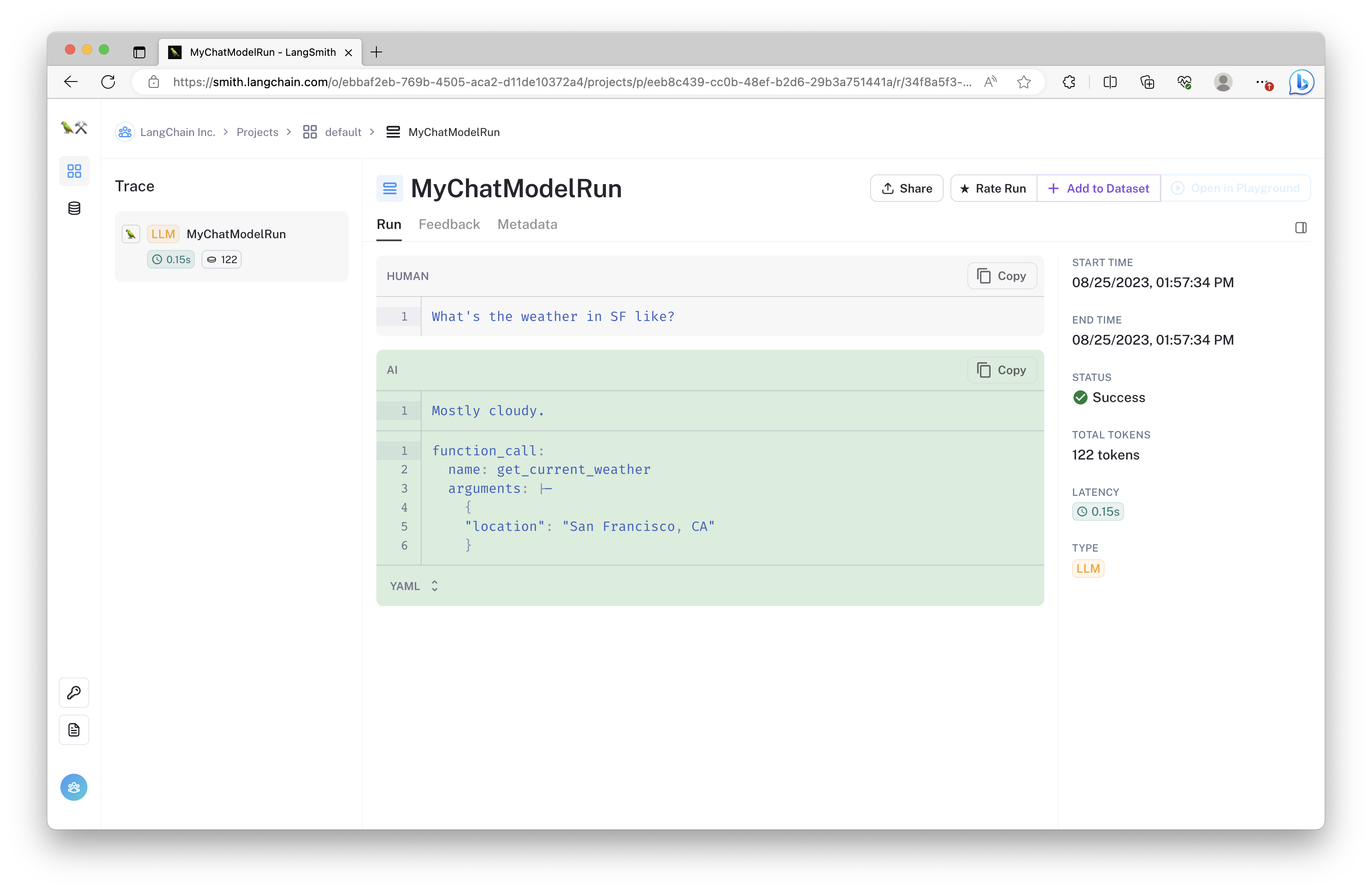

When viewed in LangSmith, the run will look something like the one below, with the human, AI, and other chat messages all given their own cards, and with the token counts visible on the right.
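To keep the chat format consistent across call sites, the inputs and outputs can be assembled by one small function. This is a sketch only: chat_run_io is a hypothetical helper, and it covers just the keys described in this section (messages plus invocation parameters in the inputs, a single choice holding the reply message in the outputs).

```python
from typing import Any, Dict, List, Tuple


def chat_run_io(
    messages: List[Dict[str, str]],
    reply: Dict[str, Any],
    **invocation_params: Any,
) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (inputs, outputs) for an "llm" run in the chat format."""
    # Invocation parameters (model, temperature, ...) sit beside the messages.
    inputs = {"messages": messages, **invocation_params}
    # The assistant reply is wrapped as the single entry of "choices".
    outputs = {"choices": [{"index": 0, "message": reply}]}
    return inputs, outputs


inputs, outputs = chat_run_io(
    [{"role": "user", "content": "What's the weather in SF like?"}],
    {"role": "assistant", "content": "Mostly cloudy."},
    temperature=0.0,
)
print(inputs["temperature"], outputs["choices"][0]["message"]["role"])
```

The inputs value goes into the POST body and the outputs value into the PATCH body, as in the example above.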

Logging "Completions" Models

To log in the "completions" format (string in, string out), LangSmith expects the following format:

- Name the run "openai.Completion.create" or "openai.Completion.acreate"

- Provide prompt: string as a key-value pair in the inputs

- Provide choices: [{"text": string}] as a key-value pair in the outputs.

run_id = str(uuid.uuid4())

requests.post(
    "https://api.smith.langchain.com/runs",
    json={
        "id": run_id,
        "name": "MyLLMRun",
        "run_type": "llm",
        "inputs": {
            "prompt": "Hi there!",
            # Optional: model or engine name, and other invocation params
            "engine": "text-davinci-003",
            "temperature": 0.0,
        },
        "start_time": datetime.datetime.utcnow().isoformat(),
        "session_name": project_name,
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

requests.patch(
    f"https://api.smith.langchain.com/runs/{run_id}",
    json={
        "end_time": datetime.datetime.utcnow().isoformat(),
        "outputs": {
            "choices": [
                {
                    "text": "\nMy name is Polly and I'm excited to talk to you!",
                    "index": 0,
                    "logprobs": None,
                    "finish_reason": "stop",
                },
            ]
        },
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

<Response [200]>

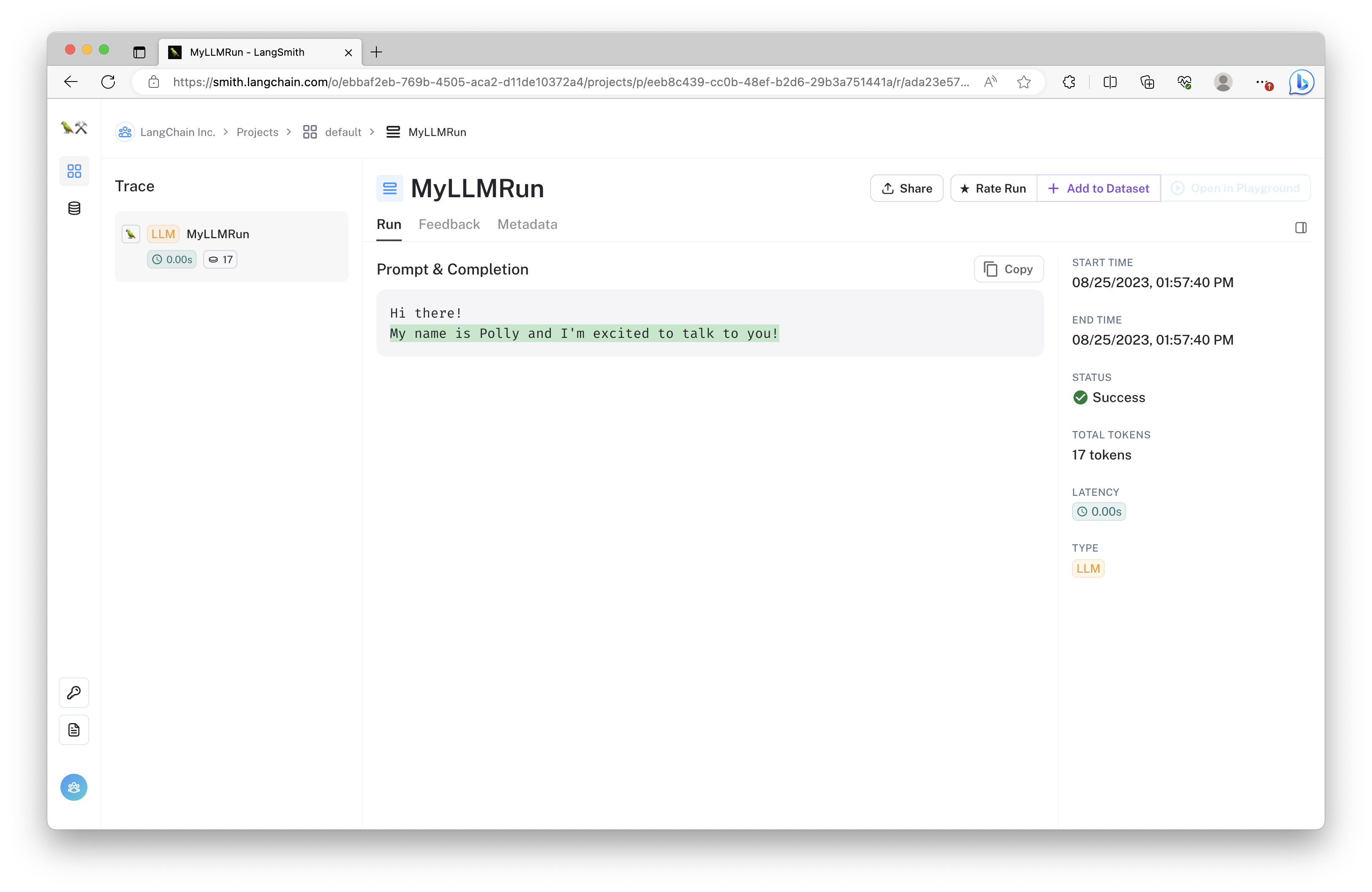

The completion model output looks like the example below. Once again, the token counts are indicated on the right, and the completion output is highlighted in green following the origina prompt.

Nesting Runs

The above examples work great for linear logs, but it's likely that your application involves some amount of nested execution. It's a lot easier to debug a complex chain if the logs themselves contain the required associations. There are currently two bits of complexity in doing so (we plan to relax the execution order requirement at some point in the future):

- You must include a parent_run_id in your JSON body.

- You must track the execution_order of the child run for it to be rendered correctly in the trace.

Below is an updated example of how to do this. We will create a new RunLogger class that manages the execution order state for us.

import uuid
from typing import Optional


class RunLogger:
    def post_run(
        self, data: dict, name: str, run_id: str, parent_run_id: Optional[str] = None
    ) -> None:
        # Create the run; parent_run_id links it to its parent in the trace tree.
        requests.post(
            "https://api.smith.langchain.com/runs",
            json={
                "id": run_id,
                "name": name,
                "run_type": "chain",
                "parent_run_id": parent_run_id,
                "inputs": data,
                "start_time": datetime.datetime.utcnow().isoformat(),
                "session_name": project_name,
            },
            headers={"x-api-key": _LANGSMITH_API_KEY},
        )

    def patch_run(
        self, run_id: str, output: Optional[dict] = None, error: Optional[str] = None
    ) -> None:
        # Close the run with either its outputs or an error message.
        requests.patch(
            f"https://api.smith.langchain.com/runs/{run_id}",
            json={
                "error": error,
                "outputs": output,
                "end_time": datetime.datetime.utcnow().isoformat(),
            },
            headers={"x-api-key": _LANGSMITH_API_KEY},
        )

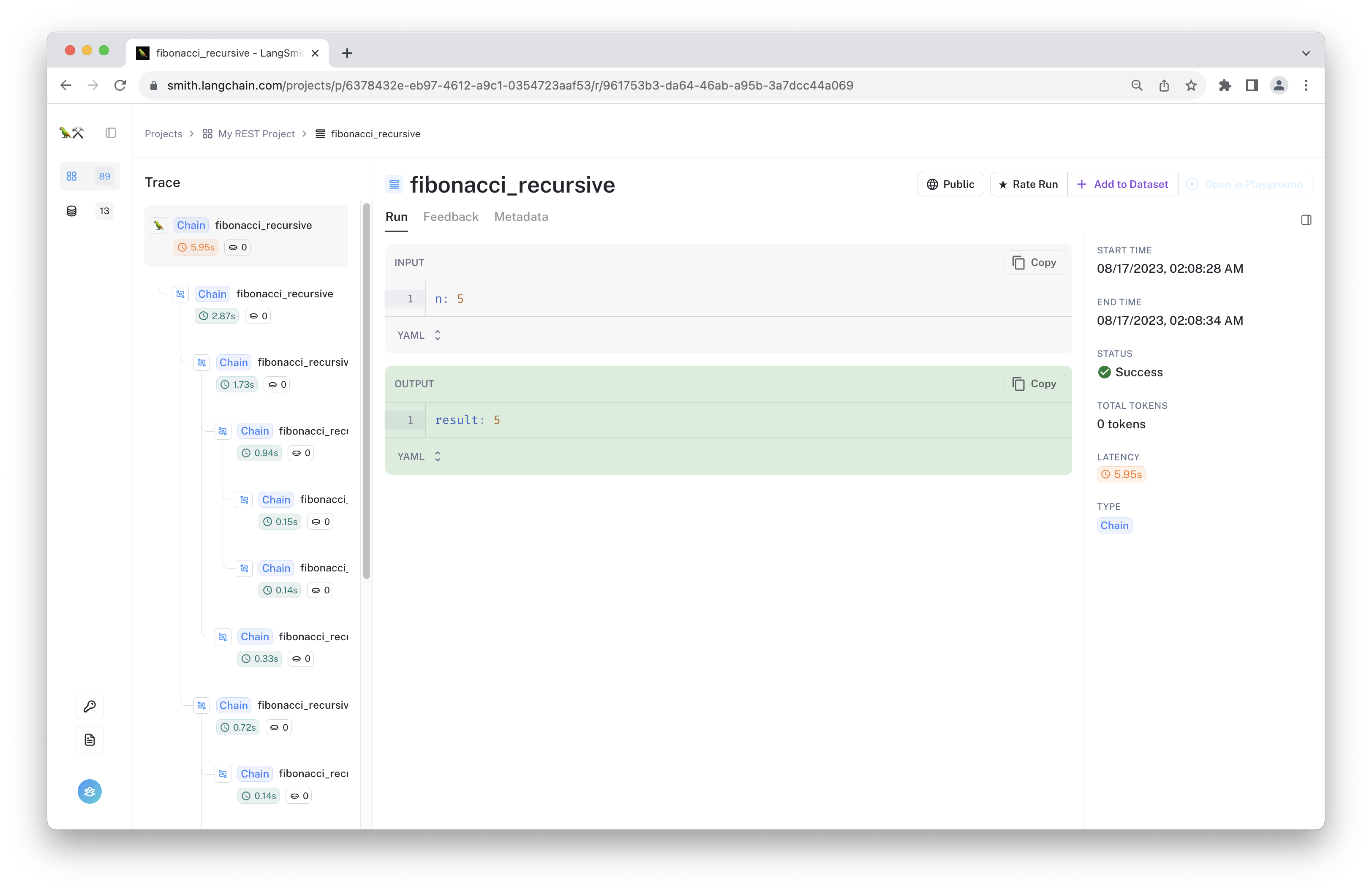

To demonstrate how this works, we will create a simple fibonacci function and log each call as a "chain" run.

logger = RunLogger()


def fibonacci(n: int, depth: int = 0, parent_run_id: Optional[str] = None) -> int:
    run_id = str(uuid.uuid4())
    logger.post_run(
        {"n": n}, "fibonacci_recursive", run_id, parent_run_id=parent_run_id
    )
    try:
        if n <= 1:
            result = n
        else:
            # Each recursive call is logged as a child of the current run.
            result = fibonacci(n - 1, depth + 1, parent_run_id=run_id) + fibonacci(
                n - 2, depth + 1, parent_run_id=run_id
            )
        logger.patch_run(run_id, output={"result": result})
        return result
    except Exception as e:
        logger.patch_run(run_id, error=str(e))
        raise


fibonacci(5)

5

This should generate a trace similar to the one shown below:

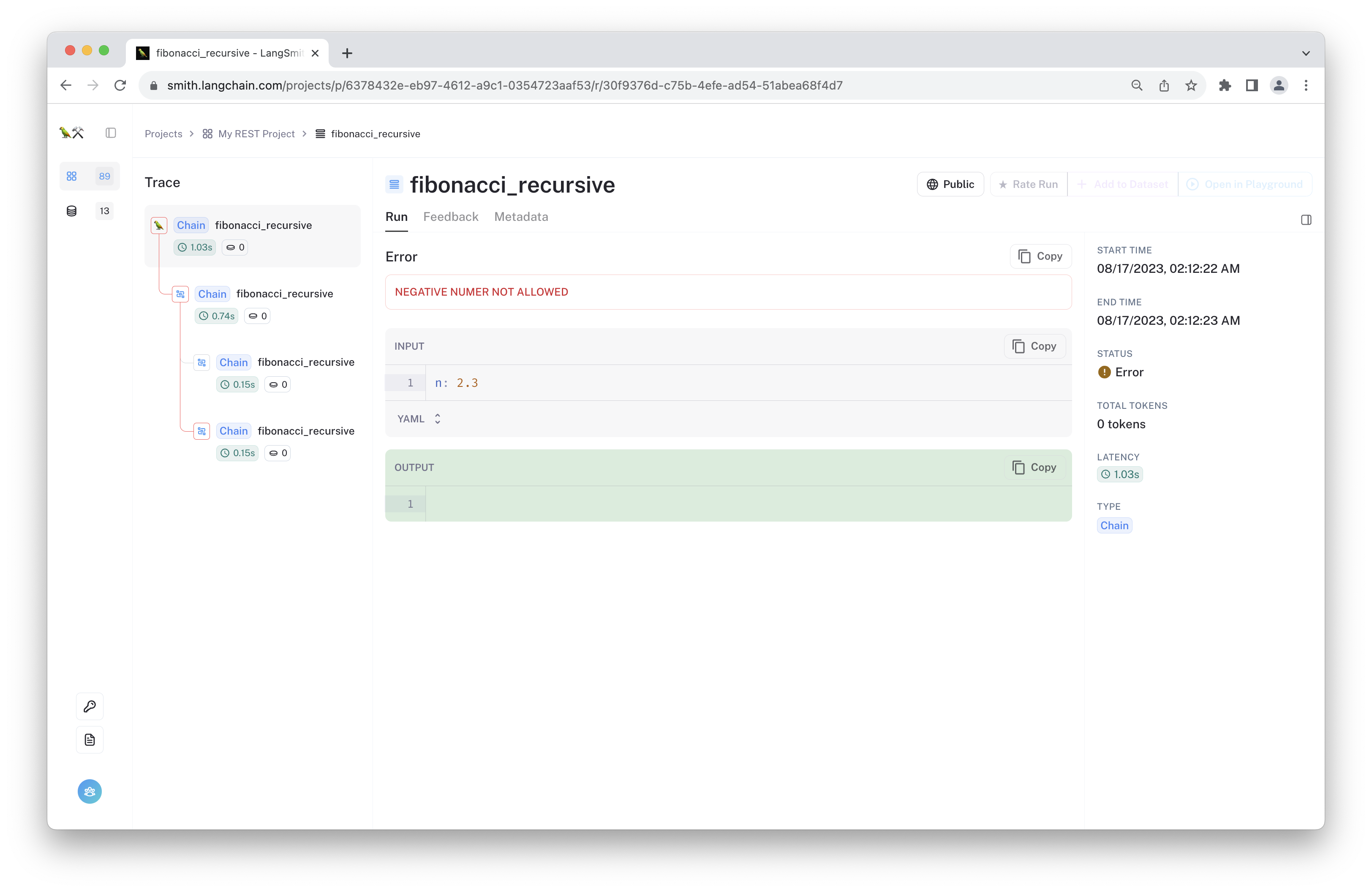

def fibonacci(n: int, depth: int = 0, parent_run_id: Optional[str] = None) -> int:
    run_id = str(uuid.uuid4())
    logger.post_run(
        {"n": n}, "fibonacci_recursive", run_id, parent_run_id=parent_run_id
    )
    try:
        if n < 0:
            raise ValueError("NEGATIVE NUMBER NOT ALLOWED")
        if n <= 1:
            result = n
        else:
            result = fibonacci(n - 1, depth + 1, parent_run_id=run_id) + fibonacci(
                n - 2, depth + 1, parent_run_id=run_id
            )
        logger.patch_run(run_id, output={"result": result})
        return result
    except Exception as e:
        logger.patch_run(run_id, error=str(e))
        raise


# We will show what the trace looks like with an error.
# A non-integer input eventually recurses to a negative value and raises.
try:
    fibonacci(2.3)
except ValueError:
    pass

The resulting run should look something like the following. The errors are propagated up the call hierarchy so you can easily see where in the execution the chain failed.

Single Requests

If you want to reduce the number of requests you make to LangSmith, you can log a run in a single request. Just be sure to include the outputs or error and set the end_time in the same POST request.

Below is an example that logs the completion LLM run from above in a single call.

requests.post(
    "https://api.smith.langchain.com/runs",
    json={
        "name": "MyLLMRun",
        "run_type": "llm",
        "inputs": {
            "prompt": "Hi there!",
            # Optional: model or engine name, and other invocation params
            "engine": "text-davinci-003",
            "temperature": 0.0,
        },
        "outputs": {
            "choices": [
                {
                    "text": "\nMy name is Polly and I'm excited to talk to you!",
                    "index": 0,
                    "logprobs": None,
                    "finish_reason": "stop",
                },
            ]
        },
        "session_name": project_name,
        "start_time": datetime.datetime.utcnow().isoformat(),
        "end_time": datetime.datetime.utcnow().isoformat(),
    },
    headers={"x-api-key": _LANGSMITH_API_KEY},
)

<Response [200]>
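When logging in a single request, the start and end times have to be captured around the actual work for the latency to be meaningful. The sketch below shows one way to do that; run_once_payload is a hypothetical helper, the {"output": ...} wrapper is an arbitrary choice, and the function only builds the POST body without sending it.

```python
import datetime
import uuid
from typing import Any, Callable, Dict


def _utc_now() -> str:
    return datetime.datetime.now(datetime.timezone.utc).isoformat()


def run_once_payload(
    name: str, inputs: Dict[str, Any], fn: Callable[..., Any], run_type: str = "chain"
) -> Dict[str, Any]:
    """Run fn(**inputs) and return one complete POST body for the finished run."""
    payload: Dict[str, Any] = {
        "id": str(uuid.uuid4()),
        "name": name,
        "run_type": run_type,
        "inputs": inputs,
        "start_time": _utc_now(),  # captured before the work starts
    }
    try:
        payload["outputs"] = {"output": fn(**inputs)}
    except Exception as exc:
        # A failed run carries an error instead of outputs. The exception is
        # swallowed here only to keep the example short.
        payload["error"] = str(exc)
    payload["end_time"] = _utc_now()  # captured after the work finishes
    return payload


ok = run_once_payload("Shout", {"text": "foo"}, lambda text: text.upper())
bad = run_once_payload("Divide", {"a": 1, "b": 0}, lambda a, b: a / b)
print(ok["outputs"], "error" in bad)
```

Either payload can then be sent with a single requests.post call, as in the example above.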

Conclusion

In this walkthrough, you used the REST API to log chain and LLM runs to LangSmith and reviewed the resulting traces. This is currently the only way to log runs to LangSmith if you aren't using a language supported by one of the LangSmith SDKs (Python and JS/TS).

You then created a helper class to log nested runs (similar to OTel spans) to take advantage of LangSmith's full trace tree debugging.