Prompt Versioning

Pulling the "latest" version of a prompt in production can introduce unforeseen issues, because the prompt changes whenever someone pushes a new commit to its repo. The LangChain Hub lets you pull a specific version of a prompt so that deployments stay consistent.

In this tutorial, we'll illustrate this with a RetrievalQA chain. You'll initialize it using a particular prompt version from the hub. This tutorial builds upon the RetrievalQA Chain example.

Here's the central takeaway: for stable production deployments, pin a prompt to a specific commit hash instead of defaulting to the latest version. To do so, append the commit hash to the prompt ID after a colon:

from langchain import hub

hub.pull(f"{handle}/{prompt_repo}:{version}")
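As a minimal sketch of the ID format described above, the helper below assembles the string that would be passed to `hub.pull`. The function name `versioned_prompt_id` is hypothetical and not part of LangChain; the example values are the handle, repo, and commit hash used later in this tutorial.

```python
from typing import Optional


def versioned_prompt_id(handle: str, prompt_repo: str, version: Optional[str] = None) -> str:
    """Build a hub prompt ID, appending the commit hash when one is given."""
    base = f"{handle}/{prompt_repo}"
    # Without a version suffix, pulling the ID loads the latest commit.
    return f"{base}:{version}" if version else base


print(versioned_prompt_id("wfh", "rag-prompt", "c9839f14"))  # wfh/rag-prompt:c9839f14
print(versioned_prompt_id("wfh", "rag-prompt"))              # wfh/rag-prompt
```

Keeping the hash as a separate argument makes it easy to store the pinned version in configuration and review changes to it like any other dependency bump.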

Prerequisites

Ensure you have a LangSmith account and an API key for your organization. If you're new, see the docs for setup guidance.

# %pip install -U langchain langchainhub --quiet

import os

os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"  # Update with your API URL if using a hosted instance of LangSmith.

os.environ["LANGCHAIN_API_KEY"] = "YOUR API KEY" # Update with your API key

os.environ["LANGCHAIN_HUB_API_URL"] = "https://api.hub.langchain.com"  # Update with your API URL if using a hosted instance of LangSmith.

os.environ["LANGCHAIN_HUB_API_KEY"] = "YOUR API KEY" # Update with your Hub API key

1. Load prompt

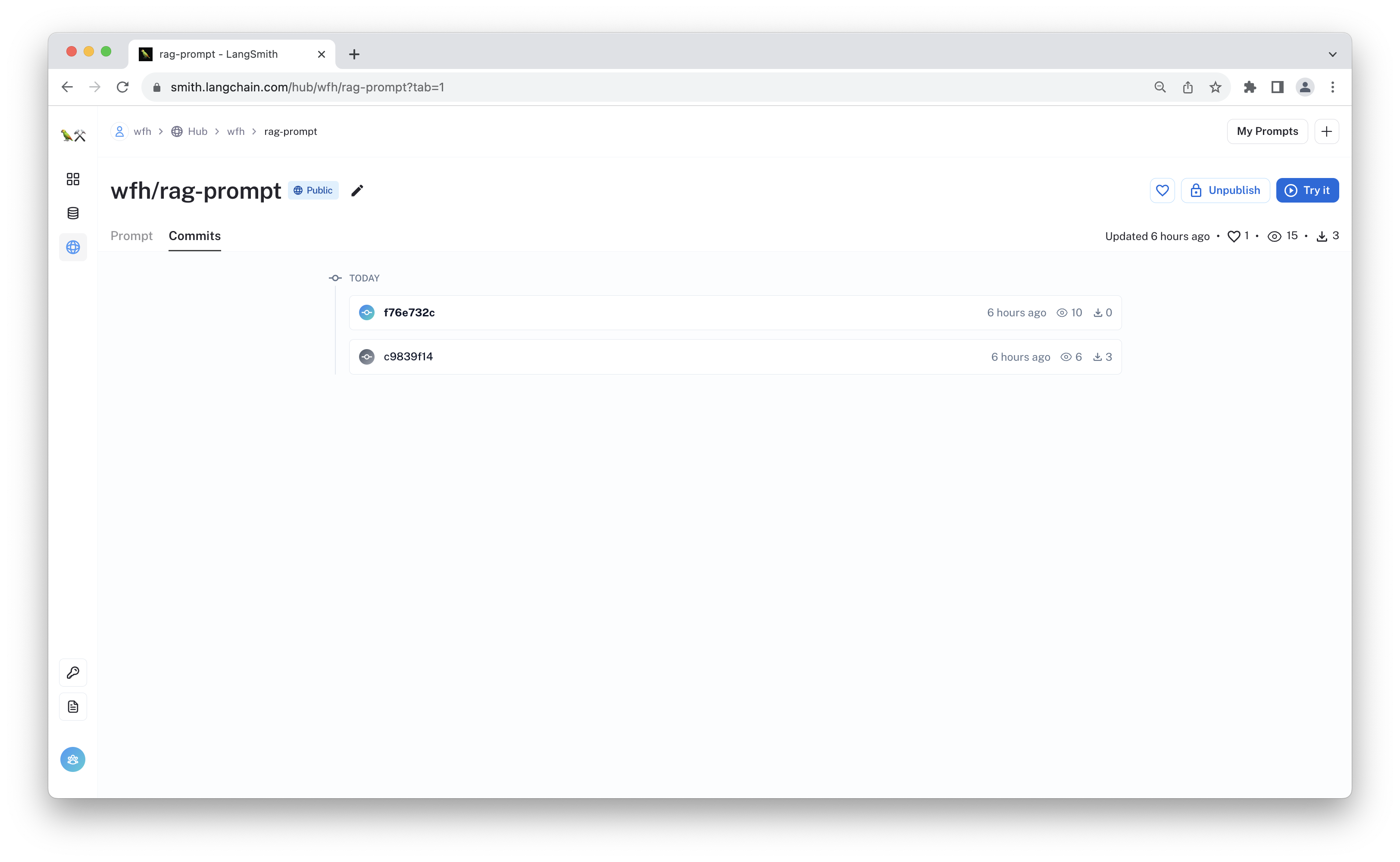

Each time you push to a given prompt "repo", the new version is saved with a commit hash so you can track the prompt's lineage. By default, pulling from the repo loads the latest version of the prompt into memory. However, if you want to load a specific version, you can do so by including the hash at the end of the prompt name. For instance, let's load the rag-prompt with version c9839f14 below:

from langchain import hub

handle = "wfh"

version = "c9839f14"

prompt = hub.pull(
    f"{handle}/rag-prompt:{version}", api_url="https://api.hub.langchain.com"
)
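Before wiring a pinned prompt into a chain, it can help to confirm that it declares the variables the chain will fill in. The sketch below is not part of the original tutorial: `missing_variables` is a hypothetical helper, and it assumes the chain supplies `context` and `question`. It only reads the `input_variables` attribute that LangChain prompt templates expose, so a stand-in object is used here to keep the example runnable without network access.

```python
from types import SimpleNamespace


def missing_variables(prompt, required=("context", "question")):
    """Return the required variable names that the prompt does not declare."""
    declared = set(getattr(prompt, "input_variables", []))
    return [name for name in required if name not in declared]


# Stand-in for illustration; in the tutorial you would pass the pulled `prompt`.
fake_prompt = SimpleNamespace(input_variables=["context", "question"])
print(missing_variables(fake_prompt))  # []
```

An empty list means every expected variable is present; anything else is worth investigating before the prompt version is promoted.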

2. Configure Chain

With the correct version of the prompt loaded, we can define our retrieval QA chain.

We will start with the retriever definition. While the specifics aren't important to this tutorial, you can learn more about Q&A in LangChain by visiting the docs.

# Load docs

from langchain.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")

data = loader.load()

# Split

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)

all_splits = text_splitter.split_documents(data)

# Store splits

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import Chroma

vectorstore = Chroma.from_documents(documents=all_splits, embedding=OpenAIEmbeddings())

Below, initialize the qa_chain using the versioned prompt.

# RetrievalQA

from langchain.chains import RetrievalQA

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

qa_chain = RetrievalQA.from_chain_type(
    llm,
    retriever=vectorstore.as_retriever(),
    chain_type_kwargs={"prompt": prompt},  # The versioned prompt is passed in here
)

3. Run Chain

Now you can use the chain directly!

question = "What are the approaches to Task Decomposition?"

result = qa_chain.invoke({"query": question})

result["result"]

'The approaches to task decomposition include using LLM with simple prompting, task-specific instructions, and human inputs.'

Conclusion

In this example, you loaded a specific version of a prompt for your RetrievalQA chain. You or other contributors to your prompt repo can then continue to commit new versions without disrupting your deployment.
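One way to enforce pinning at deploy time is to reject prompt IDs that carry no commit hash. This is a rough sketch under stated assumptions: `require_pinned` and the `PROMPT_ID` environment variable are hypothetical names, and treating a literal `latest` suffix as unpinned is a choice made for this example rather than documented hub behavior.

```python
import os


def require_pinned(prompt_id: str) -> str:
    """Return prompt_id unchanged if it carries a commit hash, else raise."""
    _, sep, version = prompt_id.partition(":")
    # No colon, an empty suffix, or "latest" all count as unpinned in this sketch.
    if not sep or not version or version == "latest":
        raise ValueError(f"Prompt ID {prompt_id!r} is not pinned to a commit hash")
    return prompt_id


# PROMPT_ID is a hypothetical variable name; the fallback is this tutorial's pinned ID.
prompt_id = require_pinned(os.environ.get("PROMPT_ID", "wfh/rag-prompt:c9839f14"))
print(prompt_id)
```

Running the check at startup makes an unpinned ID fail loudly instead of silently pulling whatever was committed most recently.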

Prompt versioning is a simple but important practice: it lets you keep experimenting and collaborating without accidentally shipping an under-validated chain component.