Testing over a dataset

It's often useful to test your prompts over a dataset to understand how your model will behave in different scenarios. The Playground allows you to easily test your prompts over any of your LangSmith datasets.

Running your prompt

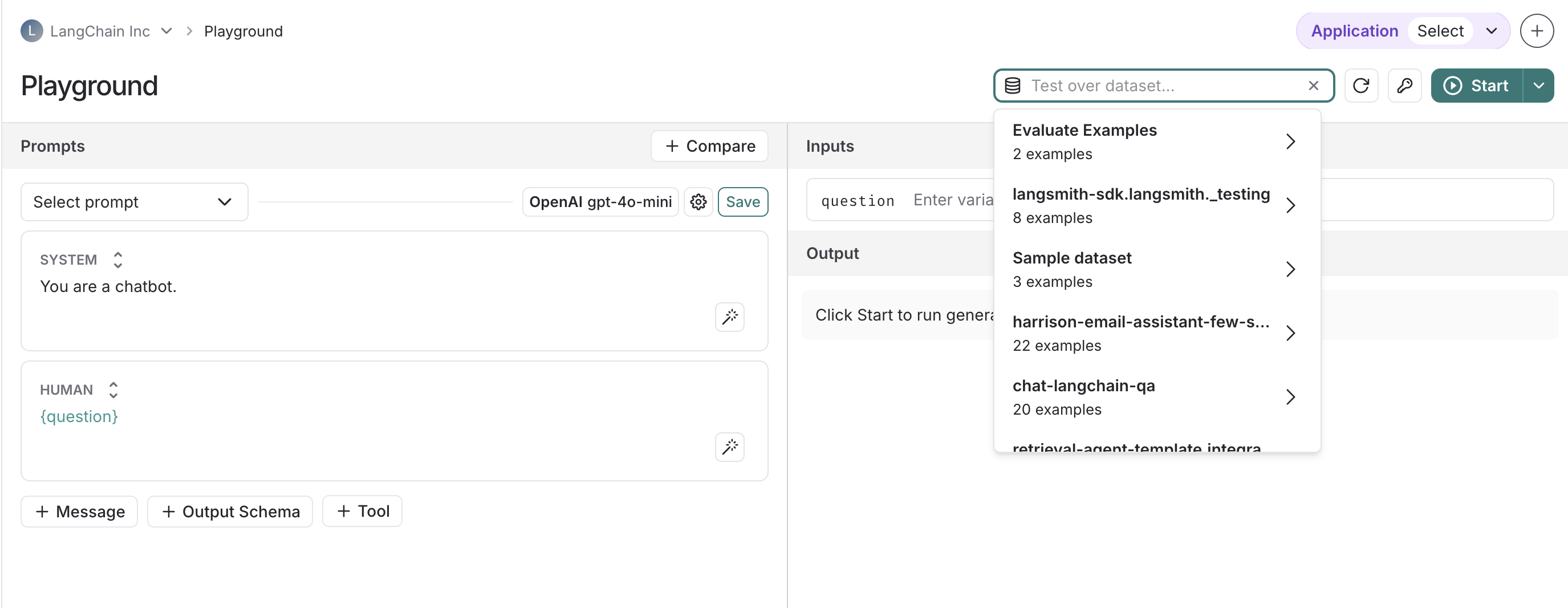

- Select the prompt you want to test. This can be either a saved or an unsaved prompt. You can add multiple prompts to the Playground by clicking "+ Compare", and all of them will be run over the dataset.

- In the top-right section of the Playground, select your dataset from the dropdown. By default, the entire dataset is selected, but hovering over a dataset lets you select one of its splits (if any exist).

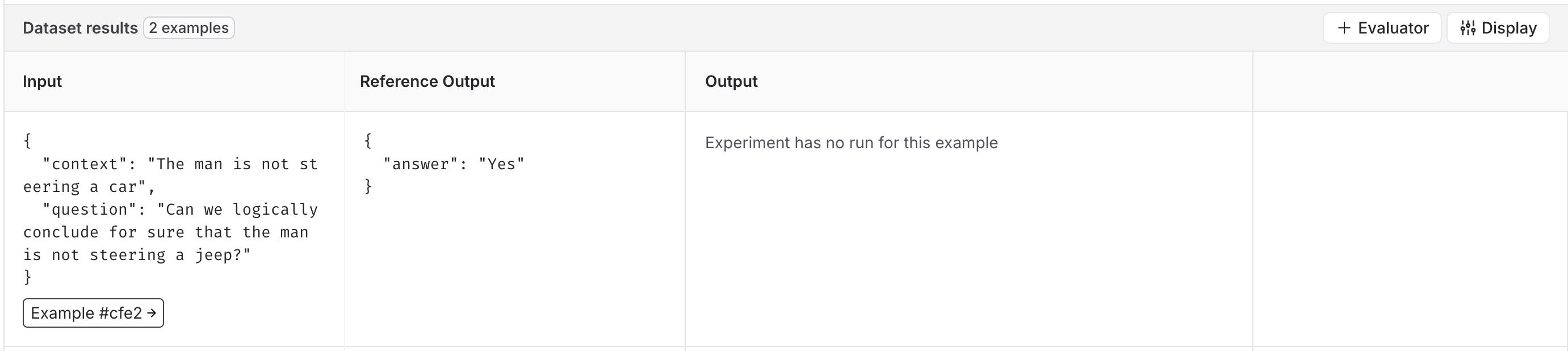

- Selecting a dataset loads a table with the first 20 examples in the dataset. From here, you can click "+ Evaluator" to configure evaluators for your experiment.

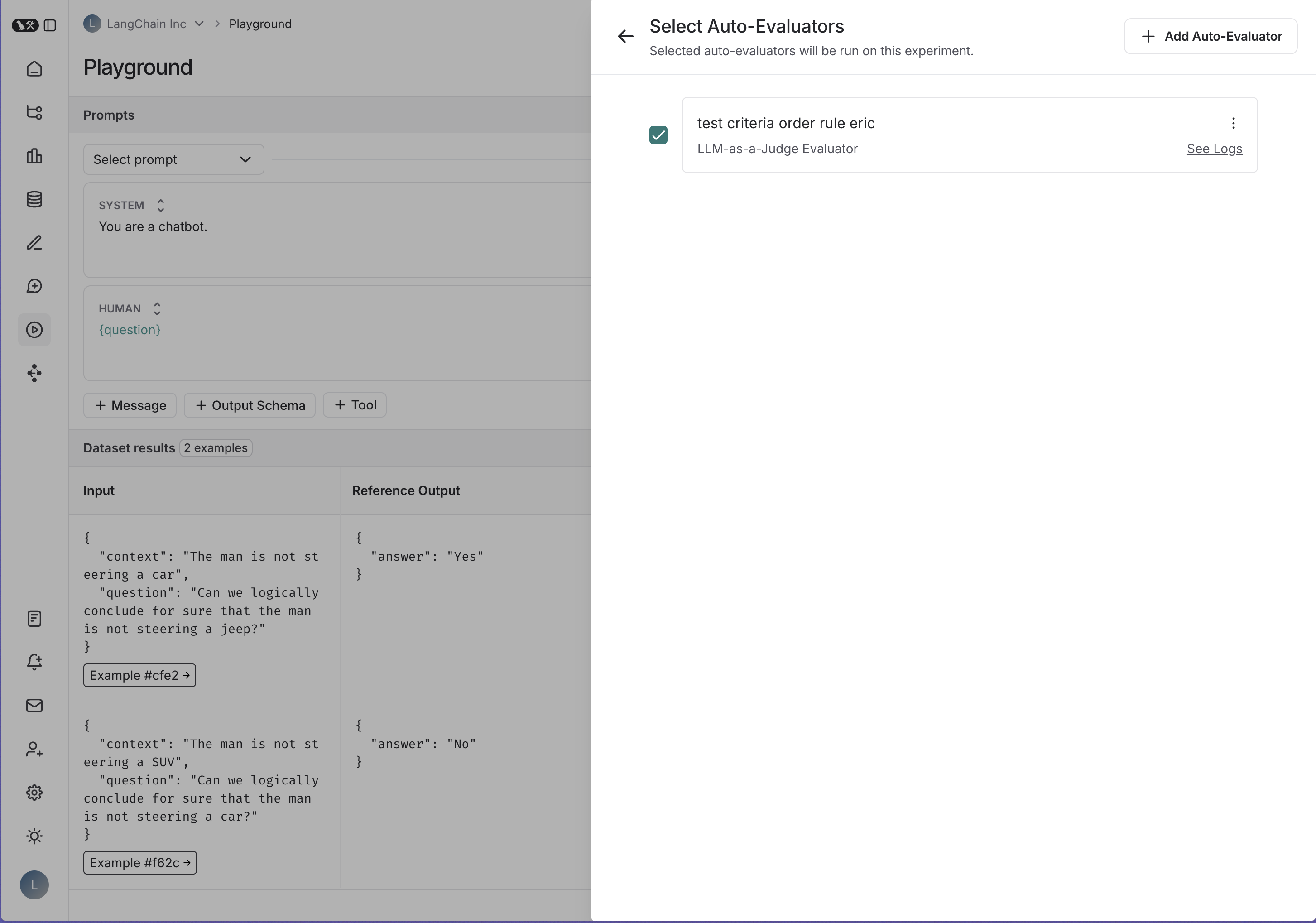

- Clicking "+ Evaluator" opens a pane where you can select the evaluators you want to use in this experiment. You can select multiple evaluators; toggle an evaluator's checkbox to add it to or remove it from the experiment.

- Once you have selected your evaluators, click the "Start" button in the Playground to run your experiment. All of the examples in the dataset are run in your experiment, but the Playground shows only the first 20. To view the full experiment results, click the "View experiment" button in the results table.
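
To make the steps above concrete, here is a minimal conceptual sketch in plain Python of what running a prompt over a dataset amounts to: format the prompt for each example, call the model, score the output with each selected evaluator, and preview only the first 20 rows. This is not the LangSmith SDK or the Playground's implementation. Every name in it (`fake_model`, `exact_match`, `run_experiment`, and the `inputs` / `outputs` / `split` keys) is a hypothetical stand-in chosen for illustration.

```python
from typing import Callable, Optional

# Hypothetical example shape, assumed for this sketch only:
# {"inputs": {...}, "outputs": {...}, "split": "..."}
Example = dict


def fake_model(prompt: str) -> str:
    """Placeholder for a real model call: upper-cases the last word of the prompt."""
    return prompt.split()[-1].upper()


def exact_match(output: str, example: Example) -> float:
    """Hypothetical evaluator: 1.0 if the output equals the reference answer."""
    return 1.0 if output == example["outputs"]["answer"] else 0.0


def run_experiment(
    prompt_template: str,
    dataset: list[Example],
    evaluators: dict[str, Callable[[str, Example], float]],
    split: Optional[str] = None,
    preview: int = 20,
) -> tuple[list[dict], list[dict]]:
    """Run the prompt over every example (optionally one split) and score it.

    Returns (all_rows, preview_rows): every example is run, but only the
    first `preview` rows are returned for display.
    """
    rows = []
    for example in dataset:
        # Skip examples outside the chosen split; None means the whole dataset.
        if split is not None and example.get("split") != split:
            continue
        prompt = prompt_template.format(**example["inputs"])
        output = fake_model(prompt)
        scores = {name: fn(output, example) for name, fn in evaluators.items()}
        rows.append({"inputs": example["inputs"], "output": output, "scores": scores})
    return rows, rows[:preview]


# A toy dataset of 50 examples, alternating between two hypothetical splits.
dataset = [
    {
        "inputs": {"word": f"item{i}"},
        "outputs": {"answer": f"ITEM{i}"},
        "split": "test" if i % 2 else "train",
    }
    for i in range(50)
]

all_rows, shown = run_experiment(
    "Shout the word: {word}", dataset, {"exact_match": exact_match}
)
test_rows, _ = run_experiment(
    "Shout the word: {word}", dataset, {"exact_match": exact_match}, split="test"
)
```

In this sketch `all_rows` plays the role of the full experiment results, while `shown` corresponds to the 20-row table displayed in the Playground.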